Figure 5 from Sticker: A 0.41-62.1 TOPS/W 8Bit Neural Network Processor with Multi-Sparsity Compatible Convolution Arrays and Online Tuning Acceleration for Fully Connected Layers | Semantic Scholar

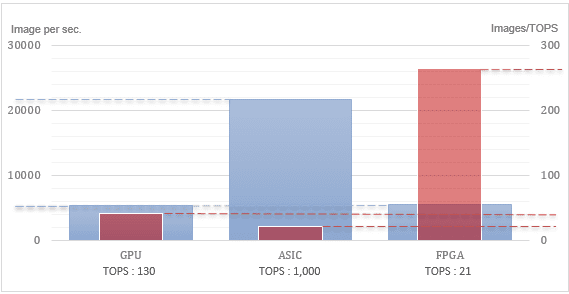

Mipsology Zebra on Xilinx FPGA Beats GPUs, ASICs for ML Inference Efficiency - Embedded Computing Design

A 0.32–128 TOPS, Scalable Multi-Chip-Module-Based Deep Neural Network Inference Accelerator With Ground-Referenced Signaling in 16 nm | Research

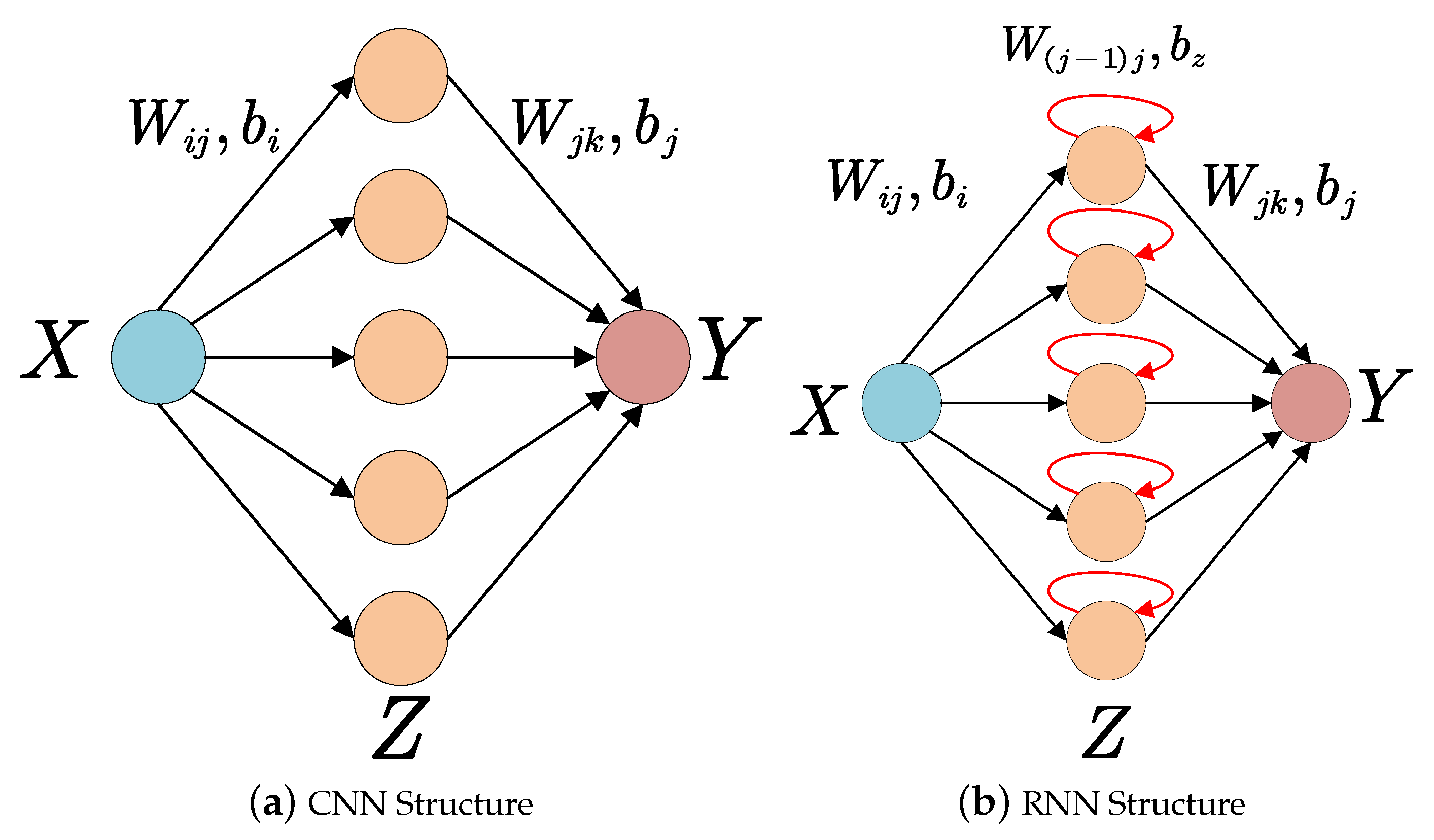

Electronics | Free Full-Text | Accelerating Neural Network Inference on FPGA-Based Platforms—A Survey

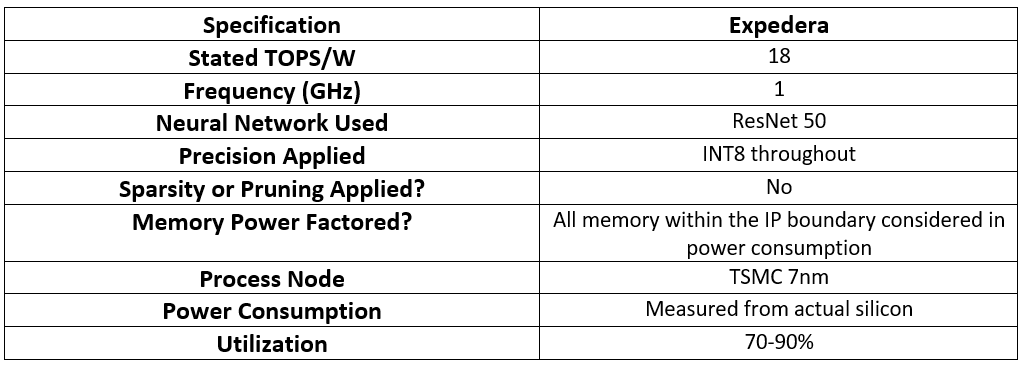

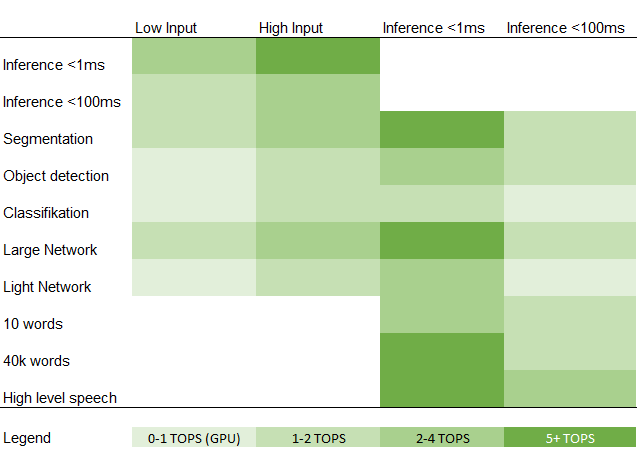

Not all TOPs are created equal. Deep Learning processor companies often… | by Forrest Iandola | Analytics Vidhya | Medium

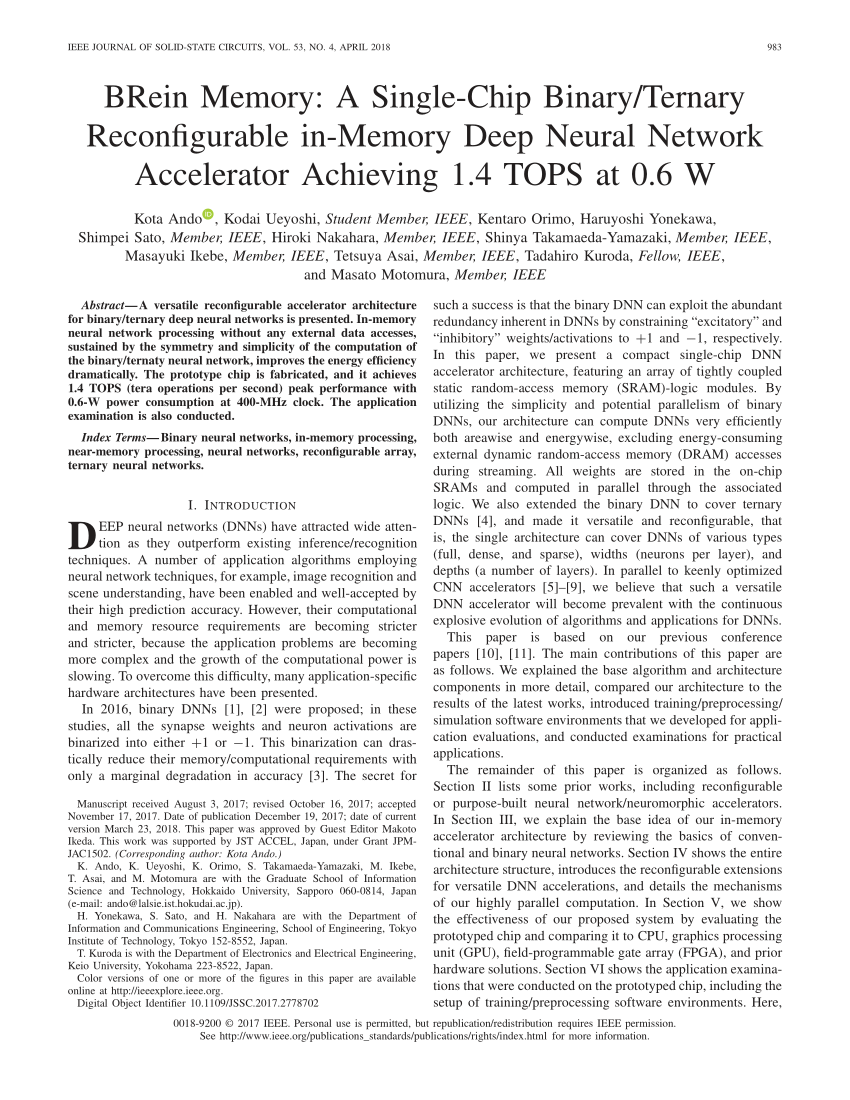

(PDF) BRein Memory: A Single-Chip Binary/Ternary Reconfigurable in-Memory Deep Neural Network Accelerator Achieving 1.4 TOPS at 0.6 W

Figure 1 from A 3.43TOPS/W 48.9pJ/pixel 50.1nJ/classification 512 analog neuron sparse coding neural network with on-chip learning and classification in 40nm CMOS | Semantic Scholar

Figure 4 from A 44.1TOPS/W Precision-Scalable Accelerator for Quantized Neural Networks in 28nm CMOS | Semantic Scholar

A 1.32 TOPS/W Energy Efficient Deep Neural Network Learning Processor with Direct Feedback Alignment based Heterogeneous Core Architecture | Semantic Scholar